Data within tDAR is primarily modeled through the java bean structure, with a series of primary and secondary entities. Primary entities include resources, creators, keywords, and resource collections. Other entities tend to be relationships between primary entities or properties of them. Creators, resources, and keywords are all hierarchical entities which implement inheritance. Inheritance in these cases help us manage common fields, and simplify data management.

The tDAR data model (bean model) is built around the needs of expressing and managing data about archaeological information and managing administrative information. At the center of the model is the Resource. Although Resource is not an abstract class, it is never explicitly instantiated – due to some functional requirements with Hibernate and Hibernate Search, it cannot be abstract. Resources are split into two categories, those with files, and those without. Projects, resources without files, exist to help with data management for multiple resources. InformationResource objects, resources with files, exist in a number of forms – Document, Dataset, Image, and supporting formats (Coding Sheet, and Ontology). InformationResource beans may be part of a Project. Resources can be managed and organized through ResourceCollection objects and are described via various Keyword Objects. Resources are also related to Creators (People and Institutions) through both rights and other roles.

Figure 1: tDAR Resource Class Hierarchy

Figure 2: tDAR Keyword Class Hierarchy

The inheritance and relationships are managed by JPA 2.0 and hibernate, as well as within the Java Bean Hierarchy. This affordance is likely necessary in the code, but does complicate some of the hibernate interactions. At the center of the data model are a set of interfaces and static classes that centralize and manage

There are effectively three separate serialized data models for tDAR: the SQL database through hibernate, the Lucene indexes through hibernate search, Java objects through Freemarker, and XML and JSON through JAXB (mainly).

These serializations provide both benefits and complexities to tDAR. There is both the challenge of keeping the data in sync across all representations, but also filtering data that may not be appropriate to that context, or that the user does not have the rights to see.

The primary serialization or representation of tDAR is the Hibernate managed Postgres database. We leverage hibernate to manage the persistence and interaction with the database through JPA 2.0. Abstracting as much of the database interaction allows us to avoid as much Postgres specific database knowledge within the application layer. Hibernate nicely supports inheritance models within the database which allows us to manage and map tDAR objects into database objects and back. Hibernate also simplifies management of sets, lists, and object relationships in most places, thus simplifying the code that we have to generate.

The complexity to the tDAR object model does provide some challenges for hibernate. First is the issue of hash codes and identity. Due to how hash codes and identity are managed and generated within the system, there are no unique business keys that most objects can use as hash-code entries. Thus, we've taken the practice of using just the database generated id as the hash code where available. We've done this broadly throughout for consistency, even in a few cases where a hash code could have been generated from other values.

Another complexity is in querying and working with the object graph. The complex interrelationships between objects and object hierarchies in tDAR often mean that basic queries may return too much data, or suffer from N+1 issues. We have addressed these issues in a few ways, first, by using queries that utilize object constructors to produce "sparse" or "skeleton" records, or by severing bi-directional relationships and using secondary queries to populate data (an example of this being the relationship between projects and their resources, where a project does not have a relationship with each of its children, but the child has a relationship to its project). Moving forward, this type of issue represents one of our biggest performance bottlenecks. Some of this may be addressable using fetch profiles or other hibernate specific solutions.

A few other known issues with the database include, a few unique keys that cannot be represented in hibernate properly due to their multi-column nature. Better versioning of the database schema via tools like Liquibase. Better performance in general and better caching to name a few.

The data stored within the tdardata database represents a different class of data. This data is loaded from data sets within the system and cannot be properly managed by hibernate because schema are generated on the fly as a data set is loaded and each is not backed by java entities, but instead by simple data objects like lists or sets. This data is managed through Spring's JDBC support and through abstractions in the PostgresDatabase class. The main interaction with this data is for two purposes, simple browsing, and data integration.

A second class of database data that is not managed in hibernate is the PostGIS data. We use PostGIS to perform reverse geolocation within the application. This enables us to allow contributors to draw bounding boxes within the system, and be able to utilize the bounding data when other users search for terms. E.g. a bounding box around the UK would enable a user to search for "England" and find it without that user entering the geographic keyword.

This work (the geolocation) is done locally for a few reasons. 1) privacy and security of the lookup (as the data may be a confidential site location) 2) customization (as we can load in our own shape files to query with content unique to our clients). Currently data loaded into this database includes country, county, state, and continent data, but it might include state and federal lands and other information.

There are a number of different queries and functions that would be more complex if relegated to the database. These include some of the complex queries built by the advanced search interface, full text queries that use data outside the database, queries that use inheritance, or resources with hierarchical rights assignments, for example. We leverage Solr to manage the Lucene indexes for us and Lucene to run these queries. When a record is saved, we either manually, or use Spring Events to index and the updated bean. Lucene provides some nice benefits in allowing us to transforms the data that's stored and managed in a flat format that is more conducive to many searches.

The flatter indexes may prevent complex Boolean searches from being possible. Another challenge is that Lucene may not be as good at certain types of data queries, such as these that utilize numeric values. Spatial queries are particularly complex due to the bounding ranges. Lucene also gives us some benefits in being able to use query and data analyzers when working with data, so stemming, synonyms, and special character queries become easier.

One challenge with the database and Lucene split, however is the time between when the database change is made and when the index is updated. For large collections or projects, the permissions or values in the index may take more time to propagate than a second.

Moving forward, we may want to consider moving to a more schemaless solr model and trying to integrate the schema management into the app similar to how we handle liquibase.

The primary serialization model for beans is through Freemarker. Through struts, the beans the getters exposed on the controller are put onto the object stack for Freemarker. Freemarker can then iterate over and interact with the beans that are visible to populate XML or HTML as necessary. This model works quite nicely in many cases for us as it exposes and manages the beans elegantly. One challenge is that too much information can be exposed to the Freemarker layer in some cases. For example, if data needs to be obfuscated, such as Latitude or Longitude data, or if other information needs to be applied so the Freemarker layer can determine what to render. Ideally, model objects could be pruned, or managed prior to exposure to the Freemarker layer.

tDAR uses XML and JSON for serialization of data to internal and external sources. Originally, tDAR used xStream and Json-lib to manage serialization of data to XML and JSON respectively. Over time, we've removed xStream and replaced it with JAXB, and have moved away from Json-lib in place of Jackson, though more work needs to be done here.

XML Serialization was primarily for internal use and re-use, it facilitated messaging and transfer of data between different parts of the system. XML serialization also allowed for logging of complex objects such as data integration, record serialization for messaging, record serialization into the filestore as an archival representation, and the import API. JSON serialization was mainly for use and re-use of tDAR data by the JavaScript layer of the tDAR software.

Moving forward, there are a number of challenges to approach with XML and JSON serialization. As we move toward pure JAXB serialization of data for both JSON and XML, tDAR must tackle the fact that the different serializations have different data requirements. While the internal record serialization should contain all fields and all values, JSON serialization may want to or need to filter out data such as email addresses or personally identifiable information. XML that may be useful in a full record serialization may not be appropriate for data import (transient values, for example). Another challenge is maintaining the XML schema versioning in line with the data model changes as both need to be revised at the same time, and changes to the schema cause backwards compatibility issues with the XML in the filestore.

One option to tackle some of these issues might be to implement Jackson's serialization profiles for different formats to serialize the same JSON. Similarly, MoXy may be a better JAXB implementation than the default.

tDAR uses the Spring Integration framework to assist in management of dependencies and services throughout the application. In many case, these dependencies and services are simply academic in their "interchangeability." That is, most of our Dao's and services are pretty implementation specific, though we have swapped a few out over time. Spring mainly allows us to configure new services and existing services quickly and easily. As the application has grown however, one of the challenges is that our services are dependent on other services. Although we've attempted to keep this to a minimum, it does add complexity. A few different solutions have been chosen (1) using getters to access the shared service (e.g. getting the AuthenticationAndAuthorizationService from the ObfuscationService); (2) moving shared components into a new service with no dependencies; and, (3) moving logic into the Dao layer if necessary. As tDAR continues to grow, some of the autowiring logic may need to be reviewed and revisited.

tDAR does have a few "configurable" services that allow it to be extended to different working environments. These are built around external dependencies, specifically authentication and authorization through the "AuthenticationAndAuthorizationService" and DOI generation via the DOIService. These services has multiple Daos to back their features. For DOIs, this is one for EZID and one for ANDS. For authentication and authorization, we utilize a number of DAOs, different methods for connecting to Atlassian's Crowd, LDAP, and a few local "test" setups.

The final use for autowiring is for tracking all implementations of various interfaces. The primary example being the Workflows. This use allows us to make sure that all implemented Tasks, and Workflows are wired in together without having to explicitly manage the instances ourselves.

We have tried to adhere to the MVC model as closely as possible with a full Service and Dao layer to back up data access and management. tDAR is constructed using the Spring Integration framework, which allows for both hard-wiring and dynamic backing of services and dao layers into the system, thus allowing greater extensibility in the future. As the project has grown, the Service and Dao have grown in complexity and undergone a series of refactors to manage complexity, and to help with the IOC/Autowiring.

The goal of the first refactor, probably the most significant, was to manage duplication in the Dao and service layers. The challenge was maintaining duplicate, yet common methods for each of the services that backed each of the bean types; an additional challenge was that every bean required a Dao and Service regardless of whether it required unique functionality beyond some basic methods. This duplication enabled the potential for bugs to be increased whenever new beans were added. The first refactor adjusted the data and service layers to develop the GenericDao and GenericService objects. These provided a few distinct functions: a) they centralized all common functionality in a class that could be sub-classed or called by other Dao and Service layer objects, and (b) as they required a class to be passed to common methods like find(), they removed the need to create specific Service or Dao classes to cover common functionality, finally, c) as these had no dependencies, they alleviated a number of autowiring issues with Spring.

One challenge of this generic model, and how the tDAR Service and Dao layer are setup is that as they were migrated to use generics, flow of control became a bit more complicated. Specifically, the Dao layer can be used and re-used for different bean types. This becomes important and complicating as different beans may have different requirements or functionality around some basic methods. Two specific examples are those beans that implement the HasResource interface, and InformationResourceFileVersions. These two types of beans have unique deletion methods which require specific cases handled in the GenericDao.delete() method to override the default behavior of simply calling hibernate's delete method. We have attempted to maintain as little of this logic as possible.

In working with hibernate in general, we've attempted to keep as standard as possible, and as close to the JPA 2.0 standard as we can. A few additional issues or non-standard behaviors continue to remain within the system including: using the session vs. entity manager, using the database id for hashCode / equality, maintaining a few bidirectional relationships between entities (between InformationResourceFile and InformationResource, for example), and maintaining some database uniqueness keys that hibernate cannot completely manage (InformationResourceFileVersion).

A final challenge of Hibernate are the performance aspects of its inheritance model. Hibernate and JPA2 provides a few methods for dealing with inheritance and how it gets mapped to tables. Based on the distribution of data and our data model, we've chosen a model that uses the "joined" inheritance where subclasses have their own tables but they only contain fields unique to that subclass. This reduces the duplication in the schema and simplifies the model in some ways, but based on how hibernate performs queries, often unnecessarily binds many tables and may perform slow queries because it returns too much data or deals with locks of other tables. We have used a few methods to avoid this where possible including using "projection" in HQL or other queries, or trying to simplify queries. We are currently investigating some other methods such as FetchProfiles, more complex reflection, caching and views.

The tDAR query builder DSL is pretty simple and maintains two different functions (a) to help with the generation of queries and maintaining of the FieldQueryParts and FieldGroups; and (b) to allow for the overriding of field analyzers at runtime to allow the incoming data to be analyzed differently from the data already stored in the index.

The tDAR query builder DSL functions as wrapper around Lucene in a different way than the Hibernate Search query builder DSL. Our DSL represents fields and groups as classes and allows them to be combined to create queries using the underlying lucene syntax. Besides basic field queries, more complex field objects exists to represent either "ranged" queries, to handle values with just IDs, objects, or complex query parts. A few examples:

When a file is uploaded to tDAR, it is automatically validated, inspected, processed, and stored on the file-system. The workflow chosen is unique to the type of file being stored, and the type of tDAR resource (Image, Document, etc.) to which the file belongs. A workflow commonly follows these steps:

tDAR's file storage and management model is heavily influenced by the California Digital Library's Micro-Services model. Data is stored on the file-system in a pre-determined structure described as a PairTree filestore https://confluence.ucop.edu/display/Curation/PairTree. The filestore maintains archival copies of all of the data and metadata in tDAR. This organization allows us to map any data stored within the Postgres database that supports the application's web interface with the data stored on the file-system, while also partitioning data on the file-system into manageable chunks Technically, the user interface is driven by the Postgres database and a set of Lucene indexes for search and storing data. The resource IDs (document, data set, etc.) are the keys to the Pairtree store. When a resource is saved or modified, the store is updated, keeping data in sync.. Each branch of the filestore is a folder for each record, "rec/" illustrated in Figure 2, below. Data associated with each tDAR record is stored in a structure inspired by the D-Flat https://confluence.ucop.edu/display/Curation/D-flat convention ensuring a consistent organization of the archival record. Figure 2: tDAR Filestore Visualized

/home/tdar/filestore/36/67/45$ tree

--- rec/

(1) |-- record.2013-02-12--19-44-32.xml

|-- record.2013-03-11--08-00-41.xml

|-- record.2013-03-11--08-01-12.xml

(2) |--- 7134/

(3) |

| --- v1/

(4) |

|-- aa-volume-376-no3.pdf

|-- aa-volume-376-no3.pdf.MD5

(5) |

|-- deriv/

|-- aa-volume-376-no3_lg.jpg

|-- aa-volume-376-no3_md.jpg

|-- aa-volume-376-no3_sm.jpg

|-- aa-volume-376-no3.pdf.txt

|-- log.xml

More

generally a path might look like:

/home/tdar/filestore/resource id/file id/version/

|

Note: the numbered items in the figure above map to the numbered items in the list below. Important terms referring back to parts of the figure are highlighted using a distinct font face.

Each section numbered in Figure 2 represents part of the tDAR Record and parts of the OAIS Model’s Submission Information Package (SIP), Dissemination Information Package (DIP), and Archival Information Package (AIP):

A significant amount of thought and planning has been put into the workflow engine for processing files in tDAR. The underlying goal of the process is to allow for the receipt of files by the web application and allow for off-machine processing as needed. This was initially designed around a RabbitMQ engine, and was working, but disabled as all processing is still done on the server. This is mainly due to the fact that the performance on most processing tasks do not actually require enough time, or enough resources to warrant this process being separated – as tDAR scales, this of course will have to change. The overall process of the workflow engine is described by the following graph:

Each workflow itself, is made up of a number of tasks. These tasks are specified by the workflow, and executed in a specific order: Setup, Pre-Processing, Create Derivatives, Create Archival, Post-Processing, Cleanup, and Logging.

As an example, the workflow for an image follows:

As tDAR's functionality expands, and the number of files and formats is increased, these workflows will need to be improved and increase their flexibility and functionality.

In addition to the workflows associated with the ingest of file, tDAR performs recurring integrity checks on these files to ensure that they have not been corrupted or otherwise altered from their original form at the time of ingest. At the time of ingest, the system records the MD5 checksum of the file in tDAR's metadata database. TDAR then routinely confirms the fixity of these files by comparing a file's current MD5 check against the recorded MD5 value in the tDAR metadata database. Any discrepancies are recorded and reported to Digital Antiquity staff.

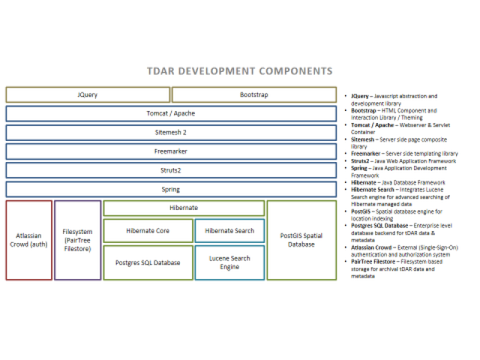

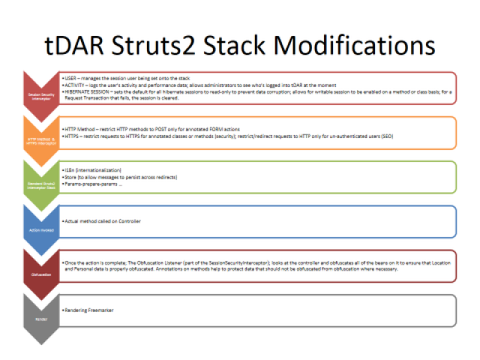

tDAR uses Struts2 to provide support for tDAR's controllers. At the point that it was chosen, struts2 and the convention plugin appeared to provide simplicity to the web layer from both the configuration and data publication modes with less XML configuration and less glue-code, especially with Freemarker being integrated more directly.

We have customized Struts2 in a number of ways, first on the configuration, we've adjusted the default stacks to include additional interceptors, second, we have added layers of security, and third, we've diverged from the defaults Struts2 model in a few ways to help simplify development using a base controller for many shared functions. This controller model has been both helpful and complicated matters in a few places, and in the future we should consider migrating to a more standard struts2 model.

Figure 3:tDAR AbstractPersistableController Class Hierarchy

The AbstractPersistableController is probably the most complex structure within the tDAR controller infrastructure and attempts to manage and simplify CRUD (Create / Update / Delete / View) actions within tDAR by centralizing most of the logic and flow, and allowing stub methods to be overridden by subsequent controllers to adjust the workflow as needed. The controller breaks actions down into the general following process:

While aspects of the AbstractPersistableController are complex, it enables faster development of new controllers and new types due to its predefined structures and interactions with tDAR services and beans. Utilizing the backend controller structure attempts to provide appropriate hooks for actions at the specific controller level and maintains bean-specific code at that controller layer, while generic functions are taken care of at the higher levels. Performance optimizations for hibernate and Hibernate Search can occur centrally – such as interacting with an interceptor for Hibernate Search's indexer to prevent premature indexing can improve performance across the board. The trade-off, of course is that the structure of this class tree does not match struts2 in a number of ways:

Most of the other tDAR controllers are much more standard in function, though they do share the same common interceptor stack.

The other major controller hierarchy is the SearchResultHandler interface and AbstractLookupController hierarchy. The goal of the abstract class and interface are intended to standardize and centralize how tDAR interacts with both search results and the SearchService. The interface provides standard names for parameters supporting searching including for pagination for the end-user interface. It also allows for a standard interface between search controllers and the search service for managing common parameters there such as the query, results, and sorting among others.

Over time, we've begun to use the SearchParameters and ReservedSearchParameters class to assist in the creation and management of queries within the system as well. These helper classes assist in the generation of Boolean search queries by collecting the objects for us without us manually generating groups of fields. The objects themselves were built out of refactoring the AdvancedSearchController to handle generic Boolean searches, but have also helped with simply simplifying the logic.

Figure 4: AbstractLookupController Hierarchy

Due to complexity of actions, a number of controllers have asynchronous actions associated with them. A few are interactive, while most are not. These asynchronous actions are associated with long-running tasks such as indexing, re-indexing, and loading or processing of data. Asynchronous data processing is done through two different models depending on the result.

Older asynchronous requests are done via the execAndWait model whereby the requests are processed and checked-on. This works for many request types, but could be improved as other users cannot see reindexing status.

Newer asynchronous requests use the Spring @Async annotation to run the annotated service method in a separate thread and run it asynchronously. In some cases, such as saving a project or collection, there is no AJAX request tied to this to report back to the user. For more complex actions, such as the bulk upload, an AJAX request allows the user to poll and determine / view the status of a request over its lifetime.

On top of the standard interceptor stack for Struts, we've added a few additional custom interceptors to the tDAR stack.

This interceptor allows us to control which HTTP Methods can be called on a given class or action. This is critical for delete and save actions within tDAR which should always use a POST method for two reasons: (a) because of the complexity of the request exceeding the maximum length for a GET, but also because of how struts2 interacts with the stack here which does not always work properly with a POST. Another logical place for this is on a Login action to ensure that the login is done via POST to ensure that passwords are not included as part of the URL.

The authentication interceptor checks the controller and the @Action to see if specific user access controls are set on them based on what groups the user is part of. The TDARGroup enum represents different groups of users with different associated rights (Admin, editor, basic user, etc.). These groups, are mirrored in the authentication and authorization system (CROWD), and associated with sets of users. This interceptor will check to see if an action has group restrictions and if it does, check the user or force the user to login if they're unauthenticated or they don't belong to the required group.

This interceptor is also used to catch when tDAR's terms of service are updated to require that the user accept the new terms.

In the current model for tDAR, if HTTPS is enabled, a user or search engine may request either the HTTP or HTTPS version of the page (same page, different protocol). To manage search engines and tracking of duplicate pages, and security, we have annotations which allow for two common functions:

The SessionSecurityInterceptor provides a number of shared functions that might, in future, benefit from being broken out into different interceptors.

One of the major functions of the interceptor is to track http requests in a useful format. While we can get some data from Apache or tomcat logs, the interceptor also allows us to add data in a useful format including who made a request, how long the processing took, and to what URL.

We use the OpenSessionInView filter to assist with hibernate session management. One of the security challenges with this filter, however, is that it, by default opens a writable session for Persistable beans. To help manage this and ensure that the combination of this and our params-prepare-params loading model (standard in struts2) doesn't result in objects or changes being persisted or modified in the database layer that shouldn't be, we automatically change the session's permissions to read-only unless otherwise specified by the @WritableSession annotation on the controller. We also catch all exceptions at this interceptor and specifically clear the hibernate session to further secure the database.

The final task associated with the interceptor is obfuscation. Due to our data security model and concerns around protection of latitude and longitude data, we by-default obfuscate all data on the controllers unless the user has the appropriate permissions to view it. The data obfuscation occurs on the controller after the action has executed, but before the result is rendered using a PreResultListener.

tDAR's view layer is built initially on Sitemesh to provide general page templating, and then Freemarker render the actual pages. In the longer term, we likely need to migrate off of sitemesh as it appears to have been abandoned, though in its current form it seems to be working for us. We've extended the Sitemesh parser to allow for a bit more configurability in the templates, allowing it to designate <DIV> regions that contain special content, and switching templates based on the existence of some of these templates. Examples include the sidebar on some of the tDAR pages, like any of the search results or edit pages. In the longer term, we should consider moving to Tiles as a replacement.

While Sitemesh controls which page template to use at a given moment, which in almost every case is layout-bootstrap.dec. Freemarker controls the layout in a bit more depth. Freemarker has a few structural patterns to it, and then a bunch of functional patterns.

tDAR is actively used by two different organizations to provide a digital archive. Each organization has their own identity, and thus their own themes. tDAR is configured to pull header, footer, css, and image data for the specified theme and render it as needed. Most of these calls are declared in layout-bootstrap.dec and reference files in src/main/webapp/includes/themes/.

Beyond this, we allow for localization of some content within tDAR, especially helptext (src/main/webapp/WEB-INF/macros/helptext.ftl ) by defining and centralizing it in its own FTL file and allowing it to be overridden using the theme's version if it exists.

Where possible, we've tried to centralize as many of our shared controls within a single or set of shared macro files (src/main/webapp/WEB-INF/macros). These macros handle the rendering of shared components such as the control for looking up or adding a person, rendering a map or section of an edit page, or common ways of setting up tables or graphs.

We strive for reducing duplication in our FTL files. In past iterations of tDAR this has led to tremendous challenge for managing versions and bugs as issues would be fixed in one form but not another. To this end, we refactored the edit and view pages for resources to reduce as much of the duplication as possible. These pages are a bit unlike the others (and this in itself is arguably a challenge), and almost resemble rails views or controllers as anything else.

Basically, the refactoring still requires a "view.ftl" and "edit.ftl" for each resource type, but, instead of rendering a page, it declares variables, and overrides methods that are used by "edit-template.ftl" and "view-template.ftl" respectively to control the rendering of the template. These changes may include adding a dedicated section for that resource type (such as a listing of the data-table-columns for a data set) or a different file-upload /edit component for a coding sheet.

The quest for balancing duplication of code with complexity can also be seen in two other distinct areas:

Over time, we've refactored these into single methods to solve issues of not fixing certain bugs as they've cropped up consistently, but introduced complexity in the rendering and flow as these methods become overly complex – even as we refactor the methods to simplify them.

Over the past year-and-a-half we've migrated a lot of our code to use the Bootstrap framework as part of a general redesign of the tDAR codebase. Using the framework has meant an overall reduction in the size of our JavaScript, CSS and Freemarker codebase by a significant amount. Bootstrap's common functionality has also meant that we can almost drop the use of JQueryUI and have removed a number of JQuery plugins that are no longer needed. Instead of relying on rendering of all of the Bootstrap controls ourselves, we're also able to take advantage of the struts2-bootstrap plugin which allows us to simply change the struts2 theme on our controls and leverage that instead of creating all of the divs and labels ourselves (something we were doing prior). Over the next year, we hope to remove JQuery UI entirely, if possible.

The tDAR client makes extensive use of the JavaScript language. At a broad level the use of javascript can be broken down into two areas: custom JavaScript written by Digital Antiquity staff; and the inclusion of JavaScript in the form of 3rd party libraries. This section describes the organization of tDAR's JavaScript code, discusses Digital Antiquity's future programming efforts with respect to JavaScript, and briefly touches on concerns and potential pitfalls with our use of JavaScript.

Locally Developed JavaScript

A large portion of JavaScript code is written by Digital Antiquity and this code serves three main purposes: it serves to implement functionality that is not available from 3rd party code; conversely, we write custom code to extend and generalize 3rd party JavaScript so that it can be used in a tDAR context.

As the use of JavaScript increases, so too does the potential for unwanted side-effects. To mitigate these side-effects we are in the process of minimizing our use of the JavaScript by "namespacing" our locally-developed JavaScript. That is, we expose one global object named "TDAR" and provide access to TDAR-specific properties and functions via this global object. We plan to further modularize TDAR's JavaScript in the coming months.

Despite the effort to modularize the tDAR JavaScript code, the following issues remain.

We plan to mitigate the problems above through the use of automatic module loading solutions such as AMD or through the use of Common.js pre-processing solutions.

We are starting to template more and more of the JavaScript output to help manage the display and centralize. We have not yet chosen one of the popular templating languages or frameworks, but instead have been using the templating language that is embedded in the JQuery FileUpload component as it's (a) already embedded and (b) seems to handle what we need at the moment.

Beyond testing, which will be covered elsewhere in this document, one of the future challenges is migrating more of our UI and code to something like Backbone, Knockout, or AngularJS. These frameworks theoretically should further reduce the locally developed custom code and simplify some of the logic within the tDAR application. Two particular areas which would be the best initial test-bed for this sort of work would be the resource-edit pages and the advanced search page.

tDAR might benefit from migrating some of its custom CSS to SASS or LESS over time, though most of it is not pretty simple and straightforward not to necessarily balance out the costs of learning a separate processing language.

There are a few goals to packaging and deploying content for tDAR:

To start, point 3 is contradictory to point's 1 & 2. Finding a balance, however, is critical. We want to make sure that when debugging and writing tDAR the JavaScript and CSS is easy to read and manage, and when dealing with the production version we can figure out what's going on too, but still manage the content.

Where possible, we try and use CDNs to distribute shared tDAR components. This includes: boostrap's CSS & JavaScript, JQuery, and commonly used plugins among other things.

One of the Maven deployment tasks is to minify all of the JavaScript and CSS prior to deployment. This maintains separate files, but it removes whitespace and validates them for us.

We're currently using a separate servlet to combine all our JS and CSS files together. This servlet, however, does seem to produce more errors than we're comfortable with. Over time, we're looking to replace this with a build-time combination.

In the future, we'd like to move to use something like RequireJS to manage dependencies, or Grunt to manage the overall JavaScript and CSS build processes.

It is impossible to maintain a zero-defect application. Over time, we've added a few infrastructure components to help us identify, track, and manage defects.

Initially developed for testing, we've started to track all JavaScript errors on the edit-resources page and well as other pages to help us understand what's broken and how it broke. As the application has become more dependent on JavaScript, this has become more important.

Besides using SLF4J and logging at WARN or ERROR, we are redirecting all ERROR entries to email as well. These entries are sent to the administrator's listserv. Administrators can then see and deal with the issues in near-real time. Over time this may need to be replaced if the size and frequency of trivial errors grow, but it adds equal emphasis to ensure that the administrators solve the underlying issue.

tDAR's growth will ultimately force us to move to running the application in a distributed environment. As we have built tDAR, we've tried to account for this in a number of fashions.

tDAR incorporates a number of different types of testing in the system, unit testing, integration testing, end-to-end automated testing, and user testing.

Unit testing within tDAR is used to test extremely basic functionality within the system that does not require any infrastructure (Database, Spring, Struts, or web interaction). We employ two different frameworks for unit testing:

For basic java tests, we use JUnit and the Surefire Maven plugin to run tests. Tests here include basic functionality around enums, to complex logic tests that are more focused on how a given class works outside of the tDAR environment. Tests of parsers, the filestore, or basic data validation are good examples of this type of test.

Although we use an integration test framework to run them, we do use QUnit for unit testing of JavaScript. This is a newer model for us, and we need to work on code coverage. Some good examples of this have to do with the inheritance interface on the edit pages along with the file upload validation.

Integration testing in tDAR is focused on testing specific services and controllers and their interactions with the underlying system, both database and filesystem. Unlike end-to-end testing, these tests do not actively include the web layer. tDAR uses JUnit and Failsafe to manage integration testing. A couple of test-runners are used to manage our integration tests. All of our integration tests are built off of the SpringJUnit4ClassRunner.

The AbstractIntegrationTestCase provides basic frameworks for running tDAR tests. It is mainly subclassed by tests that run either controller tests via the AbstractControllerITCase tree or general service layer tests.

The MultipleTdarConfigurationTestRunner enables us to re-initialize tDAR's integration or web (end-to-end) tests with different tDAR configurations at test time. This is accomplished by pointing the application at a different tdar.properties with different settings enabled.

Our older web tests are built on top of the AbstractWebTestCase which uses HTMLUnit to interact with a locally running version of tDAR. This enables us to test the entire tDAR stack while sending only HTTP requests back and forth via form submissions and URLs. As it is built off of the AbstractIntegrationTestCase, we still have access to the underlying tDAR database and services via Spring and Hibernate. The one challenge with these tests is that there is no javascript validation or testing.

Our newer web tests tend to use Selenium with the Firefox driver to test tDAR. These are true end-to-end tests and have javascript enabled to completely test the entire interface.

The final aspect of testing is user testing. While all other forms of testing allow us to build confidence about what we are testing, and the Selenium testing allows us to reduce the number of browsers that users have to test with, user acceptance testing remains critical. User testing is performed via a test matrix and set of test scripts that the user follows in various browsers (https://dev.tdar.org/confluence/display/DEV/tDAR+Functional+Testing+Plan ). The results of the tests are effectively acceptance testing for tDAR ahead of a major release.

Beyond the basic validation of the java code, we have a number of tools that run within the build and deploy cycles for tDAR that further validate all non-java tDAR Code.

Our end-to-end automated testing does HTML validation of all pages that are passed to it, JTidy is not well maintained, and thus does not have HTML5 validation, but it does provide strict parsing of all of our emitted HTML for validation errors. Tests will fail if the HTML is invalid.

HTMLUnit has embedded CSS validation of all files. We've limited the CSS validation to only error on tests with locally developed and maintained CSS. This ensures that we're not modifying non-locally developed code.

JavaScript is validated in two different ways – we use the YUICompressor and its embedded JSLint parser at build and deploy time to validate all of our static Javascript. When HTMLUnit requests JSON objects, those are validated using the net.sf.json package's serialization methods. Finally, all JavaScript used by tDAR is implicitly tested via the Selenium web tests that execute all JavaScript and fail on script errors.

After all of our integration tests are run, tDAR uses FindBugs to perform static analysis on the codebase and identify potential issues.

Paired with our test development is Clover which provides code-coverage information for tDAR. Clover allows us to see how well our tests cover the code's execution, and what areas of the code could benefit from more tests. Obviously, 100% test-coverage does not equal 0 bugs, but it does provide a basic level of confidence about the tests.

We recently added checkstyle format checking to the tDAR codebase. It's something that needs additional work at this point and we'll be working through it to clean up major formatting issues as needed. This is lower priority.

Right now we do not have a code-coverage tool for JavaScript, but it's something we hope to add.